Custom Input Devices

Omegalib supports a range of user input devices, such as 3D mice, game controllers and motion capture tracking systems, through the Virtual Reality Peripheral Network (VRPN). Alternative input devices can give users a more natural way to interact with a data visualisation, free from the constraints of a regular keyboard and mouse.

We have developed daInput, a simple input device framework that builds on the features provided by VRPN and Omegalib. It simplifies the process of adding support for custom input devices to an Omegalib visualisation. In this tutorial, we examine the capabilities of daInput.

daInput

The daInput Omegalib module includes support for the following features and functionality:

- Reusable abstractions for different types of user input device

- Customisable on-screen cursor appearance and geometry

- Complete compatibility with daHandles, permitting you to use custom input devices to interact with handles exposed by objects within a visualisation
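daInput's own class hierarchy is not reproduced in this tutorial. As a rough illustration of what a reusable device abstraction means, the sketch below assumes a hypothetical `Cursor` base class that holds the name, id and position shared by every device, and leaves device-specific event handling to subclasses. `Cursor`, `MouseCursor`, `SixDofCursor` and the dictionary-shaped events are illustrative names only, not part of daInput.

```python
from abc import ABC, abstractmethod


class Cursor(ABC):
    """Hypothetical base class: state shared by every input device cursor."""

    def __init__(self, name, cursor_id):
        self.name = name
        self.cursor_id = cursor_id
        self.position = (0.0, 0.0, 0.0)

    @abstractmethod
    def handle_event(self, event):
        """Update the cursor from a device-specific event (a plain dict here)."""


class MouseCursor(Cursor):
    """2D pointer: places screen-space x/y on the z = 0 plane."""

    def handle_event(self, event):
        self.position = (event["x"], event["y"], 0.0)


class SixDofCursor(Cursor):
    """3D controller, assumed here to report relative translation deltas."""

    def handle_event(self, event):
        x, y, z = self.position
        dx, dy, dz = event["delta"]
        self.position = (x + dx, y + dy, z + dz)


# Code that drives cursors only needs the shared interface
cursors = [MouseCursor("mouse", 0), SixDofCursor("spacenav", 1)]
cursors[0].handle_event({"x": 0.25, "y": 0.75})
cursors[1].handle_event({"delta": (0.0, 1.0, -2.0)})
for c in cursors:
    print(c.name, c.position)
```

Adding a new device type in this style means writing one new subclass; anything that iterates over cursors keeps working unchanged.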

The module includes some example cursor implementations, which you may reuse in your Omegalib scripts, or use as examples to guide the development of new controls:

- A simple 2D pointer cursor, used to support inputs from a mouse

- A controller cursor, implementing support for 3Dconnexion SpaceNavigator devices

- A mocap cursor, implementing support for OptiTrack motion capture system markers

- Example 3D cursor geometry builders and mocap device input mappings for the Data Arena

Adding support for a custom cursor to your Omegalib script takes only a few steps. This tutorial includes examples showing how to add support for each of the input devices listed above.

Each of the following examples assumes that the daInput module has been imported into your Omegalib script using the import statement shown below:

```python
from daInput import *
```

Mouse Pointer Example

Adding support for a mouse pointer is easy: define a UiContext instance in your script, and the pointer is configured and made available by default:

```python
ui_context = UiContext()
```

To let your pointer interact with any handles defined on your visualisation objects, pass the context to the daHandles SelectionManager, as shown in the previous section.

SpaceNav Controller Example

The code snippet below shows how to add support for input from a SpaceNav controller:

```python
ui_context = UiContext()
ui_context.add_cursor(SpaceNavControllerCursor('spacenav', 0, TriAxisCursorGeometryBuilder().set_position(0, 2, -4).build(), ui_context))
```

Here, we create a UiContext as before, then define a SpaceNavControllerCursor and add it to the context. The cursor takes a name ('spacenav'), an id (0), the geometry used to represent the cursor's position within the Omegalib scene (in this example, a three-axis cross-hair built with TriAxisCursorGeometryBuilder; see image 1), and a reference to the context itself.
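The `TriAxisCursorGeometryBuilder().set_position(0, 2, -4).build()` call reads like a fluent builder: each setter records a value and returns the builder so calls can be chained, and `build()` produces the finished object. The sketch below is a hypothetical, Omegalib-free stand-in that returns a plain data object rather than scene geometry; `GeometrySpec`, `SketchTriAxisBuilder` and the default values are assumptions, not daInput code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GeometrySpec:
    """Hypothetical description of a cursor's on-screen geometry."""
    name: str
    effect: str
    position: tuple


class SketchTriAxisBuilder:
    """Hypothetical fluent builder mirroring the set_*/build() call style."""

    def __init__(self):
        # Assumed defaults; the real builder's defaults may differ
        self._name = "cursor"
        self._effect = "colored -d white"
        self._position = (0.0, 0.0, 0.0)

    def set_name(self, name):
        self._name = name
        return self  # returning self is what makes chaining possible

    def set_effect(self, effect):
        self._effect = effect
        return self

    def set_position(self, x, y, z):
        self._position = (x, y, z)
        return self

    def build(self):
        # A real builder would create scene nodes here; this returns a plain spec
        return GeometrySpec(self._name, self._effect, self._position)


spec = SketchTriAxisBuilder().set_name("cursor_1").set_effect("colored -d red").set_position(0, 2, -4).build()
print(spec)
```

With this style, options the caller does not set keep their defaults, which is why a call can set only a position and still produce complete geometry.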

OptiTrack Tracker Example

This final code snippet shows how to add support for input from multiple mocap tracking markers:

mapping = DataArenaSquareMocapMapping()

ui_context = UiContext()

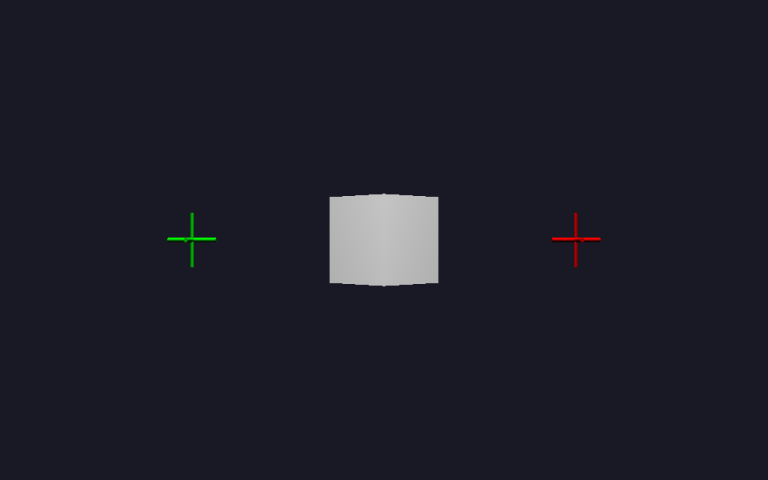

ui_context.add_cursor(OptiTrackMocapCursor('optitrack_1', 1, TriAxisCursorGeometryBuilder().set_name('cursor_1').set_effect('colored -d red').set_position(0, 2, -4).build(), mapping, ui_context))

ui_context.add_cursor(OptiTrackMocapCursor('optitrack_2', 2, TriAxisCursorGeometryBuilder().set_name('cursor_2').set_effect('colored -d green').set_position(0, 1, -4).build(), mapping, ui_context))

In this example, we first define a mapping that converts raw input from the motion capture markers into the coordinate space of the Data Arena. We then create a UiContext as usual and add two mocap cursors. As before, each cursor has a name, an id and its geometry, and each also receives the mapping. The id assigned to each cursor should match the user id assigned to the corresponding mocap marker in the Omegalib configuration settings; this is how daInput matches input events to the device that generated them. Both cursors again use the tri-axis geometry, but with different colours (red and green) so that the two are easy to tell apart.
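The mapping and the id matching described above can be pictured as a per-axis transform plus a lookup table keyed by each marker's user id. The sketch below is only an illustration under those assumptions: `LinearMapping`, `MocapCursor`, `SketchContext` and the `(user_id, position)` event shape are hypothetical stand-ins, not daInput's internals, and the scale and offset values are made up.

```python
class LinearMapping:
    """Hypothetical stand-in for a mocap mapping: per-axis scale, then offset."""

    def __init__(self, scale, offset):
        self.scale = scale
        self.offset = offset

    def apply(self, raw):
        # Convert a raw tracker position into scene coordinates
        return tuple(r * s + o for r, s, o in zip(raw, self.scale, self.offset))


class MocapCursor:
    """Hypothetical mocap cursor that stores its latest mapped position."""

    def __init__(self, name, user_id, mapping):
        self.name = name
        self.user_id = user_id
        self.mapping = mapping
        self.position = None  # unknown until the first tracker update arrives

    def on_tracker_update(self, raw_position):
        self.position = self.mapping.apply(raw_position)


class SketchContext:
    """Hypothetical context that routes tracker events to cursors by user id."""

    def __init__(self):
        self._cursors_by_id = {}

    def add_cursor(self, cursor):
        if cursor.user_id in self._cursors_by_id:
            raise ValueError(f"user id {cursor.user_id} is already assigned")
        self._cursors_by_id[cursor.user_id] = cursor

    def dispatch(self, user_id, raw_position):
        cursor = self._cursors_by_id.get(user_id)
        if cursor is None:
            return False  # no cursor registered for this marker, so ignore it
        cursor.on_tracker_update(raw_position)
        return True


# Made-up scale and offset values, for illustration only
mapping = LinearMapping(scale=(2.0, 2.0, 2.0), offset=(0.0, 1.0, -4.0))
context = SketchContext()
cursor_1 = MocapCursor("optitrack_1", 1, mapping)
cursor_2 = MocapCursor("optitrack_2", 2, mapping)
context.add_cursor(cursor_1)
context.add_cursor(cursor_2)

context.dispatch(2, (0.5, 0.25, 0.0))  # updates cursor_2 only
context.dispatch(7, (1.0, 1.0, 1.0))   # unknown user id: ignored
print(cursor_1.position, cursor_2.position)
```

Keying the lookup on the user id is why each cursor's id must match the marker configuration: in this sketch, an event whose id has no registered cursor is simply dropped.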

To query a specific motion capture marker for its position within the Data Arena or its normalised display coordinates, call the following accessor methods on the corresponding cursor:

```python
# `cursor` is an OptiTrackMocapCursor instance; keep a reference to it
# (e.g. assign it to a variable) before passing it to ui_context.add_cursor()

# Retrieve the origin of the cursor's coordinate space within the coordinate
# system (or dimensions) of the mocap environment (e.g., the Data Arena)
cursor.get_origin()

# Retrieve the current position of the cursor within a "uv" coordinate space
# that spans the total display area
cursor.get_coordinates()
```
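The exact definition of these values depends on daInput's mapping classes. Purely as an illustration, the sketch below assumes the uv space normalises a position against a flat rectangular area described by an origin, a width and a height, with (0, 0) at the origin corner and (1, 1) at the opposite corner; the real mapping may work differently. `to_uv` and the example dimensions are hypothetical.

```python
def to_uv(position, origin, width, height, clamp=True):
    """Hypothetical: normalise an (x, y) position into uv space over a width x height area."""
    u = (position[0] - origin[0]) / width
    v = (position[1] - origin[1]) / height
    if clamp:
        # Keep the cursor on the display even if the marker leaves the tracked area
        u = min(max(u, 0.0), 1.0)
        v = min(max(v, 0.0), 1.0)
    return u, v


# Made-up example: a 10 x 4 area whose lower-left corner sits at (-5, 0)
print(to_uv((0.0, 2.0), origin=(-5.0, 0.0), width=10.0, height=4.0))
```

Whether to clamp is a design choice: clamping keeps a cursor visible at the display edge, while leaving values unclamped lets calling code detect that a marker has moved outside the tracked area.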

Where to Next?

If you would like to dig deeper and learn more about how the daInput Omegalib python module works, or contribute updates of your own, please refer to the repository on GitLab, where you will be able to find all of the code:

Some possible enhancements you might like to try adding include:

- Adding support for additional input devices (e.g. a game controller)

- Implementing alternative geometry builders to create custom 3D cursor objects of your own

- Implementing a custom motion capture mapping for the Data Arena or your own visualisation system