| Year | 2022 |

| Credits | Rachel Landers (Director); Marcus Eckermann (Cinematographer); Greg Ferris (Visualisation); Thomas Ricciardiello (DA Visualisation Developer, Technical Assistance & Control App Development); Ben Simons (DA Technical Director, Technical Assistance) |

| 3D Stereo | No |

| Tags | UE4, film, photo shoot, unreal engine, virtual production |

Exploring Mawson

Exploring Mawson is an innovative hybrid documentary that uniquely ‘recasts history’ to retrospectively bring diversity to the pervading white, male narratives through which we currently understand the Australasian Antarctic Expedition (AAE) of 1911-1914 and broader Australian history. It is a prototype for a television series which recasts several key Australian histories and presents an exciting opportunity for actors of all races, ethnicities, abilities and genders, to play a role in revisiting iconic Australian stories with modern sensibility and representation.

The Data Arena was used for a prototype shoot in late November 2021 to explore the theatre’s unique virtual production possibilities. Using Unreal Engine 4, interior and exterior scenes of Mawson’s Hut were created with real-time lighting and atmospheric effects. Amongst the many objects inside Mawson’s Hut was a virtual gas lamp, with a UE Point Light actor tracked via our Optitrack Motion Capture system, allowing Mawson to walk around the virtual environment with live updates to light and shadows beyond the screen.

Virtual Production in the Data Arena

Virtual Production replaces the traditional on-set green-screen background with a dynamic computer graphics (CG) image. It eliminates the post-production step in which the green pixels are replaced with a matte (image). The final background is there on-set, captured in-camera. The Data Arena screen creates a virtual set extension, which everyone can see on the day of recording.

Notice that there are actual lights on-set which light the actors and props. The CG background (Mawson's Hut) has virtual lights, which can be adjusted in realtime. The actual and virtual lights are adjusted to match in position, brightness, and colour temperature, to achieve a particular look. Controls for the virtual lights are described below.

Full shoot credits

| Name | Role |
|---|---|
| Rachel Landers | Director |
| Dylan Blowen | Producer/Sound Recordist/B Camera op |
| Emmeline Dulhunty | Production Designer |
| Amy Duloy | Art Dept Assistant |
| Marcus Eckermann | Cinematographer |
| Tahlia Magistrale | Asst. Camera |
| Claire Cooper-Southam | C Camera Operator |
| Greg Ferris | Visualization |
| Liam Branagan | 1st AD |
| Bailey Pulepule | Associate Producer/Set Covid Safety Officer |
| Luke Cornish | Editor |
| Hayden Jones | Stand-In - MAWSON |
| India Wagner | Cast PA/Covid Safety Officer |
| Royang Huangfu Murray | Sound Assist |
| Alex Roberts | Sound Assist |
| Geoff Reid | Sound Assist |
| Grace Kopsiaftas | Runner |
| Cato Lafas | Data Wrangler (Thursday, Friday) |
| India Wagner | Runner |
| Grace Rached | Runner |

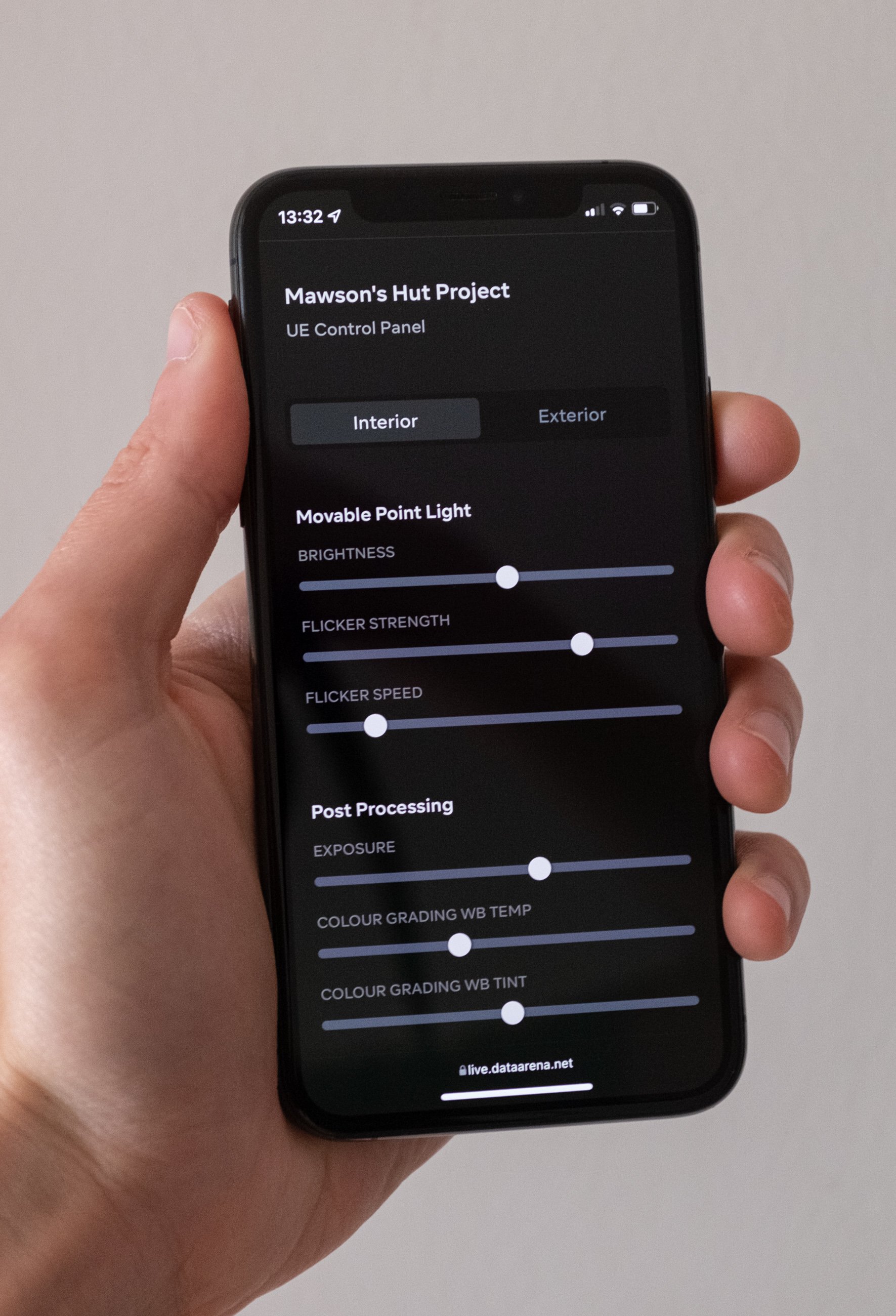

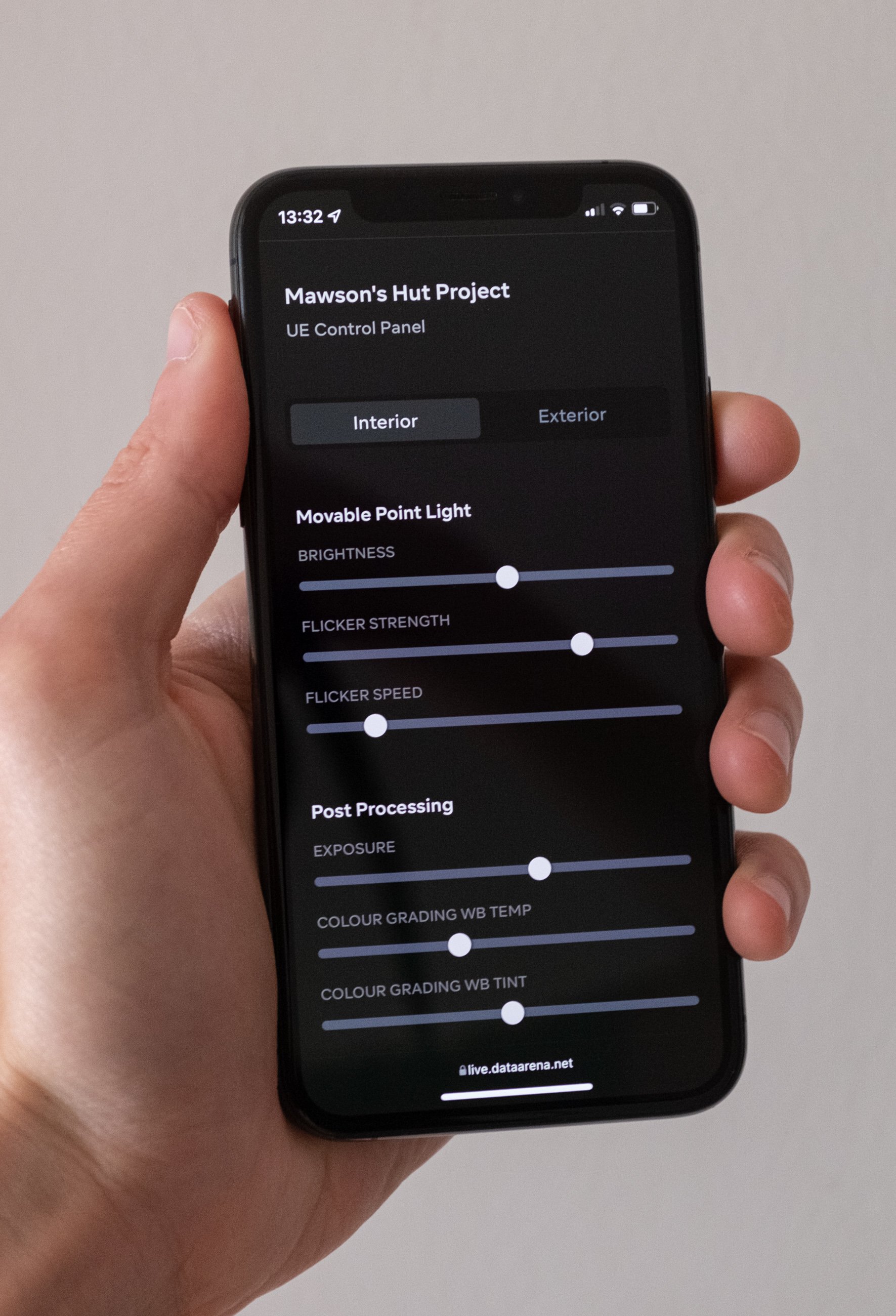

Control interface

A bespoke control interface was created to communicate with UE4 from a phone or tablet, allowing live adjustment of virtual camera exposure, white balance and tint, amongst other scene controls.

The interface allows the user to change scenes (interior cabin, exterior view). A scene change triggers the UE4 project to switch levels between the interior and exterior views of Mawson's Hut. Control parameters for each scene are kept in separate tabs.
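The scene switching and per-scene tabs described above can be sketched as a small state object. This is only an illustration, not the project's code: the level names, parameter names, ranges and default values are all assumptions made for the example.

```python
from dataclasses import dataclass, field

# Hypothetical parameter ranges; the real app's limits are not documented here.
LIMITS = {
    "exposure": (-5.0, 5.0),             # exposure compensation, in stops
    "white_balance": (1500.0, 15000.0),  # colour temperature, in kelvin
    "tint": (-1.0, 1.0),
}

# Hypothetical level names for the two scenes.
LEVELS = {"interior": "MawsonsHut_Interior", "exterior": "MawsonsHut_Exterior"}


@dataclass
class SceneState:
    level: str
    # Each scene gets its own dict, so edits in one tab never leak into another.
    params: dict = field(default_factory=lambda: {
        "exposure": 0.0, "white_balance": 6500.0, "tint": 0.0})


class ControlState:
    """Holds one parameter set per scene, like the separate tabs in the GUI."""

    def __init__(self):
        self.scenes = {name: SceneState(level) for name, level in LEVELS.items()}
        self.active = "interior"

    def change_scene(self, name):
        """Switch the active scene and return the level the engine should load."""
        if name not in self.scenes:
            raise KeyError(f"unknown scene: {name}")
        self.active = name
        return self.scenes[name].level

    def set_param(self, name, value):
        """Clamp a value to its range and store it for the active scene."""
        low, high = LIMITS[name]
        clamped = max(low, min(high, float(value)))
        self.scenes[self.active].params[name] = clamped
        return clamped
```

Keeping a separate parameter set per scene means that switching back to the interior restores the values last chosen for it, rather than inheriting the exterior settings.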

The Progressive Web Application utilises SocketIO for message broadcasting with minimal latency and is able to scale to completely remote use-cases.

The GUI is implemented using the Model-View-Controller (MVC) pattern, which allows multiple smartphones to connect, view the current settings, and set values in a consistent manner. Any parameter change made by one user is immediately reflected on all connected displays.
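A minimal sketch of this idea follows, with the network layer left out. The real application broadcasts over SocketIO; here plain in-process callbacks stand in for connected phones, and the class and parameter names are hypothetical.

```python
class SharedModel:
    """The single source of truth for all control values (the 'model')."""

    def __init__(self, initial):
        self._values = dict(initial)
        self._views = []

    def connect(self, view):
        """Register a view and send it the current settings straight away."""
        self._views.append(view)
        view(dict(self._values))

    def set(self, name, value):
        """Apply a change from any client and broadcast the new state to all."""
        self._values[name] = value
        for view in self._views:
            view(dict(self._values))  # each view receives its own copy


# Two "phones" that simply record every update they receive.
phone_a, phone_b = [], []
model = SharedModel({"exposure": 0.0, "tint": 0.0})
model.connect(phone_a.append)
model.connect(phone_b.append)

# A change made from one device reaches every connected view.
model.set("exposure", 1.5)
```

Because every client renders from the state it is sent instead of from its own local copy, a newly connected phone sees the current settings at once and all displays stay in agreement.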

Lantern Tracking Test

Here's a quick Lantern Tracking test we made. In regular production, actors are lit with coloured lights to match the colour space of the background image.

For this test, Thomas is not lit properly. We just wanted to show how the Lantern's motion interacts with the virtual production (VP) background, as if it were truly casting light into the scene.

The first video shows the motion interaction test.

The second video shows how the effect is achieved.

There is a MoCap tracking marker stuck to the top of the Lantern. A battery-powered headlamp is attached with sticky tape, so the Lantern appears lit.

The 3D position of the Lantern is tracked in realtime by the Optitrack Motion Capture system (not in view). In the live background projection, a CG light is moved to the same position and follows the Lantern's motion.

As a result, the real Lantern appears to cast light and shadows into the virtual scene.
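The tracking step can be sketched roughly as follows. This is not the project's implementation. It assumes the motion capture system reports positions in a right-handed, Y-up frame in metres and that the engine expects a left-handed, Z-up frame in centimetres. The actual axis mapping depends on how the tracking volume is calibrated and aligned with the scene. The smoothing step and all names here are hypothetical additions for illustration.

```python
CM_PER_M = 100.0


def mocap_to_unreal(position_m):
    """Convert a right-handed, Y-up position in metres to left-handed, Z-up centimetres."""
    x, y, z = position_m
    # Swapping the Y and Z axes flips handedness and makes Z the up axis.
    return (x * CM_PER_M, z * CM_PER_M, y * CM_PER_M)


class LightFollower:
    """Smooths tracked positions so the virtual light does not jitter."""

    def __init__(self, alpha=0.5):
        self.alpha = alpha  # 1.0 = no smoothing; smaller = smoother but laggier
        self.position = None

    def update(self, position_m):
        """Move the light part of the way towards the latest tracked position."""
        target = mocap_to_unreal(position_m)
        if self.position is None:
            self.position = target  # first sample: jump straight to it
        else:
            self.position = tuple(
                p + self.alpha * (t - p) for p, t in zip(self.position, target))
        return self.position
```

Each new sample from the tracker would be passed to `update`, and the returned position applied to the virtual point light on every frame.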