9 Dec 2020 16:12

Developing multiple cursors for multiple users

One of our goals for the Data Arena is to design multi-user participatory experiences. As we develop in Unreal Engine and integrate it into our cave environment, we've started to think about how users can interact with a scene together. We'd rather avoid standard keyboard and mouse input, because it limits the number of participants and isn't an ideal experience for the Data Arena.

Instead, we're looking at how to use some of the input devices already working in the arena. These include our OptiTrack tracking markers (from our OptiTrack motion capture system) and other VRPN devices such as the 3D Connexion SpaceNav and Sony PlayStation controllers.

Before getting caught up in hardware input, we've started thinking through how to create multiple cursors in Unreal Engine using Blueprints. For multiple users to interact with a scene simultaneously, each user needs their own independent cursor, so developing a Class Blueprint that spawns multiple "Cursor" objects made sense.

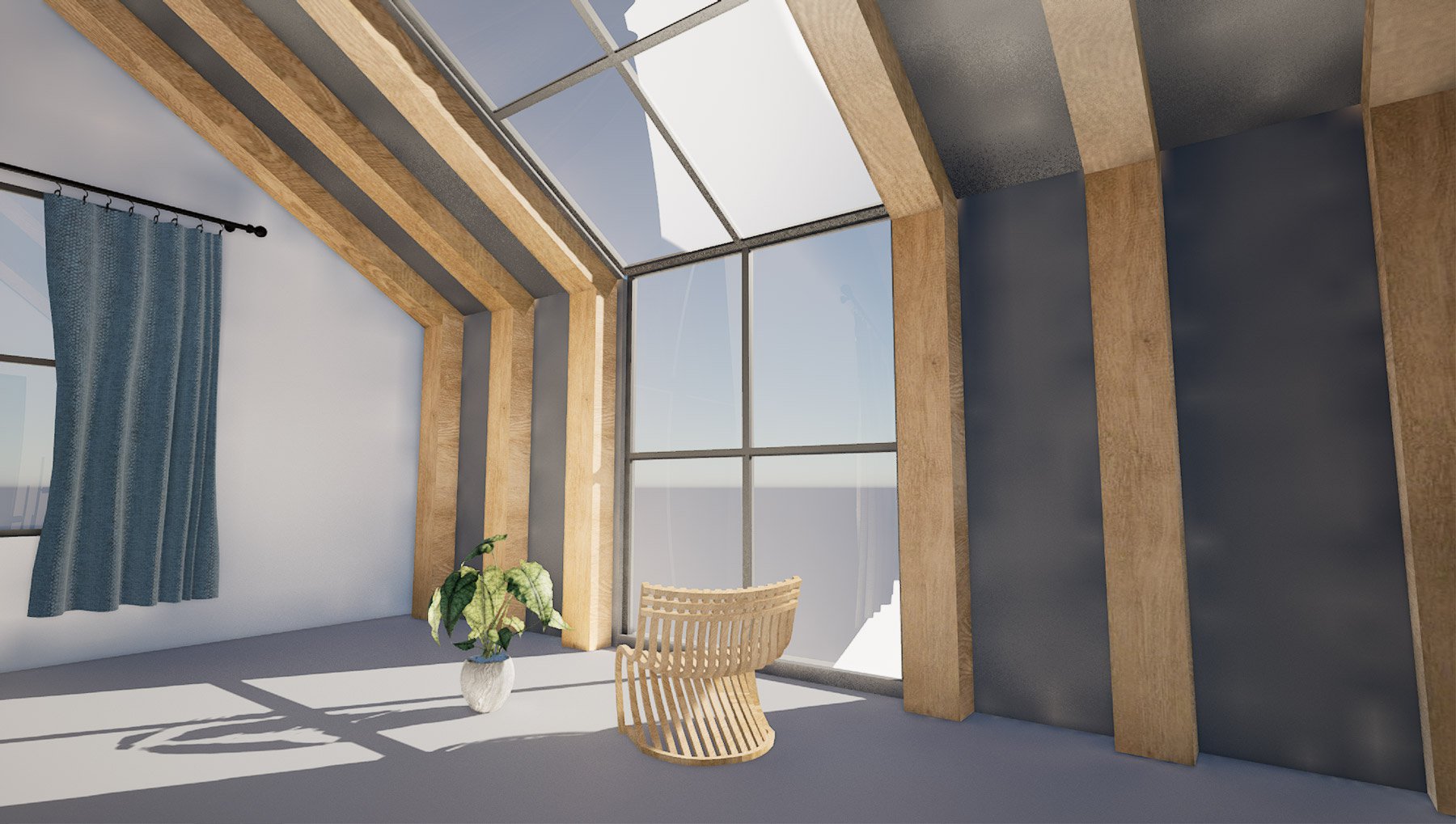

What you see below are cubes and chairs. Yes, they're just placeholder objects while we develop multi-user manipulation. Later on, they'll be replaced with data-relevant objects, such as bacteria cells.

1. Make a Cursor Widget

This first experiment (as seen in the previous post), from late July, involved setting up a Widget, which is Unreal Engine's way of creating a "heads-up display" attached to the viewport. The pink square is our first pass at a cursor. It is itself a separate Widget and belongs to a custom Cursor object, which is responsible for keeping track of the cursor's screen position and updating it on key-press events. As you might notice, it's a bit off target.
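To make the Cursor object's job concrete, here is a minimal Python sketch of the same idea outside Unreal. It is not our Blueprint. The class name, the per-cursor key bindings and the 10-pixel step are all assumptions for illustration. Each cursor owns its own screen position (origin at the top-left, y growing downward) and moves only when one of its own keys is pressed.

```python
from dataclasses import dataclass, field


@dataclass
class Cursor:
    """Hypothetical stand-in for the custom Cursor object: it owns a
    2D screen position and updates it on key-press events."""
    name: str
    x: float
    y: float
    speed: float = 10.0  # assumed: pixels moved per key press
    # Assumed per-cursor key bindings, so several cursors can share one keyboard while testing.
    bindings: dict = field(default_factory=lambda: {
        "up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0),
    })

    def on_key_pressed(self, key, viewport_w, viewport_h):
        """Move one step in the bound direction, clamped to the viewport."""
        if key not in self.bindings:
            return  # not one of this cursor's keys, so ignore it
        dx, dy = self.bindings[key]
        self.x = min(max(self.x + dx * self.speed, 0.0), viewport_w)
        self.y = min(max(self.y + dy * self.speed, 0.0), viewport_h)
```

Keeping the position inside the cursor object, rather than in the widget that draws it, is what later lets several cursors coexist without sharing state.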

2. Cursor Alignment

The "off target" cursor issue led us down a rabbit hole. After a lot of debugging and on-screen measuring, we found that the red "hit" locations (the red line-trace visualisations in the video) appeared exactly where the cursor object's 2D vector coordinates said they should. For example, for coordinates X: 50, Y: 50, a red hit mark appeared at exactly that spot on screen. The cursor icon, however, was offset from that spot by an amount that kept changing. We discovered that UE's DPI Scaling options were responsible for the misalignment.

By default, UE scales the entire HUD according to the resolution of the game's viewport (which is constantly in flux in the editor), so widgets are no longer drawn at the raw pixel positions they are given. This is a responsive-design measure intended to keep the UI consistent across the many platforms UE targets. Once the Get Viewport Scale node reported a value above 1.0, the issue became clear. We adjusted the project's settings to keep the viewport scale at 1.0.
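The arithmetic behind the drift can be sketched in a few lines of Python. This is a simplified model, assuming the viewport scale acts as a single uniform multiplier on widget coordinates; the function names are our own, not Unreal API. If a pixel position is handed straight to a widget, the widget is drawn at that position times the scale, so the error grows with distance from the top-left corner and changes whenever the scale changes. Dividing by the scale first (or pinning the scale to 1.0, as we did) removes it.

```python
def drawn_position(widget_pos, viewport_scale):
    """Where a widget placed at widget_pos (DPI-scaled units) lands on screen, in pixels."""
    return (widget_pos[0] * viewport_scale, widget_pos[1] * viewport_scale)


def pixel_to_widget(pixel_pos, viewport_scale):
    """Convert a pixel position (e.g. a line-trace hit) into widget units."""
    return (pixel_pos[0] / viewport_scale, pixel_pos[1] / viewport_scale)


def misalignment(pixel_pos, viewport_scale):
    """Offset between the hit point and the icon when pixels are used directly as widget units."""
    drawn = drawn_position(pixel_pos, viewport_scale)
    return (drawn[0] - pixel_pos[0], drawn[1] - pixel_pos[1])


print(misalignment((50, 50), 1.25))    # (12.5, 12.5)
print(misalignment((400, 300), 1.25))  # (100.0, 75.0): the error grows across the screen
```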

Generally, this kind of screen-position problem is solved by normalizing all (x, y) values to lie between 0 and 1, where (0, 0) is the top-left corner and (1, 1) is the bottom-right. Conceptually, the middle of the screen is then always (0.5, 0.5), a value that stays the same even when the screen's actual pixel resolution changes.
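A minimal Python sketch of that convention follows; the function names are illustrative. Storing a cursor position in normalized form and converting to pixels only when drawing means the cursor keeps its relative place on screen when the resolution changes.

```python
def to_normalized(px, py, width, height):
    """Pixel coordinates -> 0..1 coordinates, with (0, 0) at the top-left."""
    return (px / width, py / height)


def to_pixels(nx, ny, width, height):
    """0..1 coordinates -> pixel coordinates for a given resolution."""
    return (nx * width, ny * height)


# The same normalized position lands in the same relative spot at any resolution.
print(to_pixels(0.25, 0.75, 1280, 720))   # (320.0, 540.0)
print(to_pixels(0.25, 0.75, 1920, 1080))  # (480.0, 810.0)
```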

In the next video, notice that the (x, y) hit-position values displayed on screen are not between 0 and 1: we see values like 350 and 1450. Beware: these are raw pixel coordinates, not normalized coordinates.

3. Another Cursor

Although the custom cursor functionality was built as a Class Blueprint, spawning multiple cursors into the scene was less straightforward than we had hoped and required some Blueprint redesign (we switched between Actor-based and Widget-based Blueprints a few times). In the end we found a configuration that worked and were able to spawn several cursors at once. There are just two in this scene, but in principle the approach scales to any number.
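The key property we needed is that every spawned cursor is its own instance with its own state. A minimal Python sketch, assuming cursors store normalized positions and are spread evenly across the screen when spawned; `spawn_cursors` and `CursorState` are hypothetical names, not part of our Blueprints.

```python
from dataclasses import dataclass


@dataclass
class CursorState:
    """Hypothetical per-cursor state; positions are normalized (0..1)."""
    cursor_id: int
    x: float
    y: float


def spawn_cursors(count):
    """Spawn `count` independent cursors spaced evenly across the screen's middle row."""
    return [CursorState(cursor_id=i, x=(i + 1) / (count + 1), y=0.5) for i in range(count)]


cursors = spawn_cursors(2)  # two cursors, as in this scene
cursors[0].x = 0.1          # moving one cursor leaves the other untouched
```

Nothing in the spawn loop depends on the count, which is why the same setup should work for two cursors or twenty.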

4. Two Cursors Pick Up Objects

The most exciting advance in this project was adding object pick-up and drop functionality. This was far simpler than anticipated thanks to Unreal Engine's Physics Handle component and YouTuber Dean Ashford's tutorial on grabbing objects. With a bit of tweaking to use our custom cursors rather than the mouse position, we got it working fairly quickly.
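The grab, move and release cycle can be sketched in Python, loosely following the pattern of the Physics Handle (grab a component, keep setting a target location, release). This does not reproduce Unreal's physics; instead, the held object simply closes a fixed fraction of the distance to its target each tick. The class names and `follow_rate` are hypothetical, and so is the rule that an object held by one cursor can't be grabbed by another, which is our assumption about what multi-user grabbing needs.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class GrabbableObject:
    name: str
    position: Vec3
    held_by: Optional[str] = None  # id of the cursor holding it, if any


class CursorHand:
    """Hypothetical per-cursor grab logic (not Unreal's PhysicsHandle API)."""

    def __init__(self, cursor_id: str, follow_rate: float = 0.5):
        self.cursor_id = cursor_id
        self.follow_rate = follow_rate  # assumed: fraction of remaining distance closed per tick
        self.held: Optional[GrabbableObject] = None
        self.target: Optional[Vec3] = None

    def grab(self, hit_object: Optional[GrabbableObject]) -> bool:
        """Pick up whatever this cursor's line trace hit, unless another cursor holds it."""
        if self.held is not None or hit_object is None or hit_object.held_by is not None:
            return False
        hit_object.held_by = self.cursor_id
        self.held = hit_object
        self.target = hit_object.position
        return True

    def set_target(self, location: Vec3) -> None:
        """Called as the cursor moves: where the held object should head."""
        self.target = location

    def tick(self) -> None:
        """Pull the held object part of the way toward the target each frame."""
        if self.held is None or self.target is None:
            return
        self.held.position = tuple(
            p + (t - p) * self.follow_rate for p, t in zip(self.held.position, self.target)
        )

    def release(self) -> None:
        """Drop the held object so any cursor can grab it again."""
        if self.held is not None:
            self.held.held_by = None
        self.held = None
        self.target = None
```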

5. Multiple Cursors Interact

This is the most recent state of the project, with three Cursor objects spawned, each with a hand icon that changes to show its grabbing state and a custom colour for identification. To optimise the project a bit further, these cursors were generated from a Data Table. See the images below for a breakdown of how this all came together with Blueprints.
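The appeal of a Data Table is that each cursor becomes one row of data rather than extra Blueprint logic. Unreal can import Data Tables from CSV, so here is a hedged Python sketch of that idea; the column names, row names and hex colour format are our own illustration, not the project's actual table.

```python
import csv
import io
from dataclasses import dataclass

# Hypothetical table: one row per cursor.
CURSOR_TABLE = """Name,Colour
Cursor_Red,#FF3030
Cursor_Green,#30FF30
Cursor_Blue,#3030FF
"""


@dataclass
class CursorConfig:
    name: str
    colour: tuple  # (r, g, b), each 0-255


def parse_hex_colour(value):
    """Turn '#RRGGBB' into an (r, g, b) tuple."""
    value = value.lstrip("#")
    return tuple(int(value[i:i + 2], 16) for i in (0, 2, 4))


def load_cursor_table(text):
    """Read one CursorConfig per CSV row."""
    reader = csv.DictReader(io.StringIO(text))
    return [CursorConfig(row["Name"], parse_hex_colour(row["Colour"])) for row in reader]


configs = load_cursor_table(CURSOR_TABLE)  # three cursors, one per row
```

With this layout, adding a fourth participant means adding a row, not editing the spawning logic.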